As artificial intelligence advances, the line between technological progress and ethical limits is blurring. One of the most debated trends in recent years is the emergence of so-called “undressing AI” software, and Muke AI Undress has become one of the most contested tools in this category. It raises growing concern because it can use AI to create manipulated images of people without their consent. Is this a technological achievement or an infringement on human dignity and privacy?

This post addresses the ethical, legal, and technological concerns surrounding Muke AI Undress. We look at how it reportedly works, why it is problematic, and what society needs to do about the growing abuse of AI in such intimate and sensitive contexts.

What is Muke AI Undress?

According to users, Muke AI Undress uses artificial intelligence to digitally remove clothing from images of people. Like other deepfake products, it relies on generative AI models, often trained on very large datasets of human images, to produce highly realistic but entirely synthetic visual material.

Such programs claim to rely on advanced algorithms that can predict skin tones, body types, and shapes concealed under clothing, all from a single clothed photo. Some might see this as a demonstration of the power of AI-generated imagery, yet the consequences of using such tools without the subject’s permission are deeply troubling.

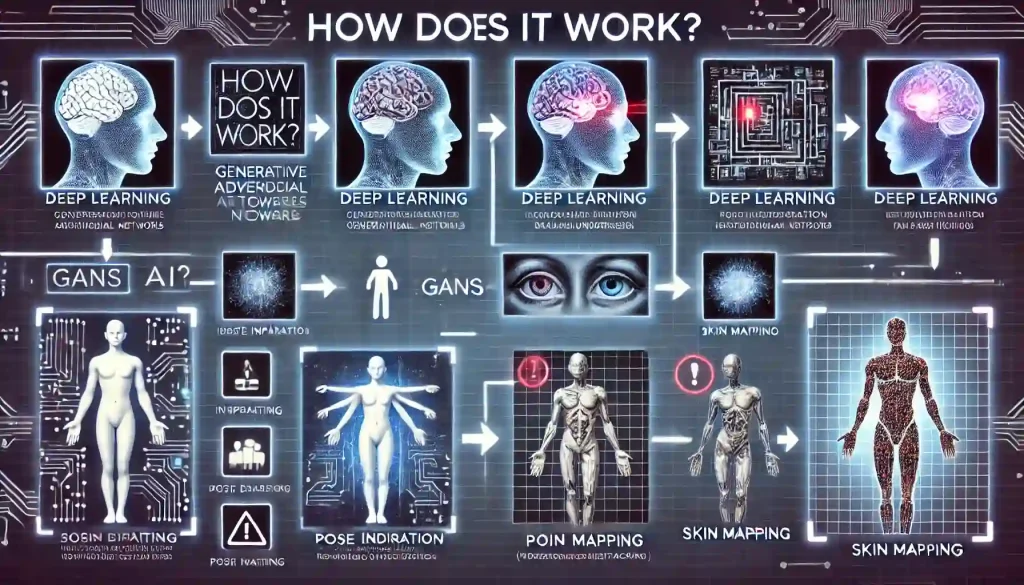

How Does It Work?

The developers have not disclosed the actual architecture of Muke AI Undress, which is unsurprising given its unofficial and unethical nature, but it is presumably built on the following technologies:

Generative Adversarial Networks (GANs): These networks create new images that are close approximations of real photographs.

Deep Learning Models: Trained on thousands (or even millions) of images of bodies in order to infer what clothing conceals.

Image Inpainting: A technique for filling in missing pieces of an image, usually applied to restore old photos or eliminate objects.

Pose Estimation & Skin Mapping: Used to match human poses and replicate realistic body shapes.

The results may look real, but they are fabricated: the model guesses at what it cannot see rather than revealing anything true about the person. That realism is exactly what makes the output so damaging when it is used maliciously.

The Ethical Dilemma

A basic ethical question drives the Muke AI Undress controversy: Should people use AI to produce unauthorized intimate images of individuals? For the majority of ethicists and privacy advocates, the answer is an emphatic no.

Main Ethical Issues:

Breaching Consent: Subjects are usually unaware that their pictures are being manipulated and have not agreed to it.

Objectification and Exploitation: Such tools sexualize and dehumanize the people they depict.

Digital Harassment: Outputs of such tools are often used for revenge, blackmail, or cyberbullying.

Psychological Damage: Victims of deepfake content can suffer trauma, anxiety, and reputational harm.

Even when people use such tools ‘for fun’ or on images of consenting adults, they normalize a capability that is easily turned on unsuspecting victims.

Legal Environment Globally

A growing number of governments are recognizing the threat posed by deepfake and undressing AI technologies. Laws differ by jurisdiction, but the move toward criminalizing such content is gaining momentum.

Countries Acting

United States: Several states, including California and Virginia, have enacted laws against sharing non-consensual deepfake pornography.

United Kingdom: The Online Safety Act contains provisions against intimate image abuse, including digitally manipulated content.

South Korea: The law criminalizes disseminating or possessing non-consensual deepfakes.

European Union: The EU’s AI Act, adopted in 2024, places transparency obligations on AI-generated or manipulated images (deepfakes), alongside strict controls on high-risk AI applications.

Authorities are making efforts, but enforcement remains difficult, especially since people can use tools like Muke AI Undress anonymously and share the results through dark web platforms or encrypted messaging apps.

Real-World Impact

The social and psychological impact of these tools is hard to overstate. Victims of manipulated nude images often experience:

Reputational damage: Particularly if the pictures circulate in their community or workplace.

Mental trauma: Anxiety, depression, and PTSD are commonly reported.

Loss of control: Victims feel helpless as their online representation is misused.

Challenges in pursuing justice: Victims find it hard to prove harm or identify the perpetrators.

For public figures, influencers, and even children, the impact can be even more devastating.

Top Expert Opinions

Dr. Emily Renner, Digital Ethics Researcher:

“Developers and users of Muke AI Undress and similar tools exploit AI innovation for harmful purposes, embodying its worst aspects. The harm they inflict far exceeds any entertainment or novelty.”

Alex Kim, Cybersecurity Lawyer:

“Even if these tools are framed as ‘entertainment,’ they raise serious legal threats. Consent, privacy, and digital integrity are not up for debate in any ethical system.”

Why Are These Tools So Popular?

Despite the controversy, tools such as Muke AI Undress have become popular for several reasons:

Anonymity: People can usually use these tools without revealing their identity.

Accessibility: Many tools are available for free or distributed through underground forums.

Curiosity Factor: Novelty attracts some users who do not consider the ethical implications.

Lack of Awareness: Many users do not realize that creating these images is a form of digital abuse.

This points to the need for widespread digital literacy and ethical AI education.

What Can Be Done?

Combating the abuse of tools such as Muke AI Undress will require a multi-faceted strategy that combines technology, law, education, and personal responsibility.

Suggested Solutions

AI Watermarking: Require developers to embed tamper-resistant digital watermarks in AI-generated images.

Platform Accountability: Hold platforms and forums liable for hosting or sharing such material.

Education Campaigns: Educate students and the general population on digital consent and AI ethics.

Global Legislation: Advocate for a common global code of conduct against AI abuse.

Victim Support Systems: Provide legal and psychological assistance to victims of deepfake exploitation.
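
To make the watermarking idea above a little more concrete, here is a minimal Python sketch of a signed provenance tag that a generator could attach to every image it produces. It is an illustration under stated assumptions, not a description of any real product: the names `SECRET_KEY`, `make_provenance_tag`, and `verify_provenance_tag` are hypothetical, and the tag is a detached record stored alongside the image rather than a watermark embedded in the pixels.

```python
import hashlib
import hmac
import json

# Hypothetical signing key held by the operator of the image generator (assumption).
SECRET_KEY = b"example-key-do-not-use-in-production"


def make_provenance_tag(image_bytes: bytes, generator: str) -> dict:
    """Build a signed record stating that these exact bytes are AI-generated."""
    record = {
        "sha256": hashlib.sha256(image_bytes).hexdigest(),
        "generator": generator,
        "ai_generated": True,
    }
    # Serialize with sorted keys so signing and verification see the same bytes.
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    record["signature"] = hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()
    return record


def verify_provenance_tag(image_bytes: bytes, tag: dict) -> bool:
    """Return True only if the tag is authentic and matches the image bytes."""
    claimed = {k: v for k, v in tag.items() if k != "signature"}
    payload = json.dumps(claimed, sort_keys=True).encode("utf-8")
    expected = hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking signature bytes through timing.
    signature_ok = hmac.compare_digest(expected, tag.get("signature", ""))
    hash_ok = claimed.get("sha256") == hashlib.sha256(image_bytes).hexdigest()
    return signature_ok and hash_ok
```

A tag like this is tamper-evident rather than tamper-proof: editing the image or the record makes verification fail, but nothing stops someone from simply discarding the tag. That limitation is one reason watermarking is usually proposed together with platform accountability and legislation rather than as a standalone fix.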

A Tech Perspective: Can the Tech Be Repurposed?

Interestingly, the technology underlying applications such as Muke AI Undress is not inherently harmful. Generative AI has legitimate uses as well:

Medical Imaging: AI inpainting to reconstruct corrupted or incomplete scans.

Art Restoration: Reconstructing missing parts of old paintings.

Content Generation: Filling gaps in drawings, storyboards, and animation.

This reinforces the point that the technology itself is neutral; what we do with it determines its value.

Final Thoughts: Innovation vs. Exploitation

The debate over Muke AI Undress is, more than anything else, about who bears responsibility for how AI is used. However remarkable the underlying technology may be, it should not distract from the ethical violation inherent in non-consensual output.

We must ask ourselves: Do we want to live in a world where anyone’s image can be altered for entertainment or exploitation? Or will we come together to harness AI in ways that empower, uplift, and drive responsible innovation?

The future of AI isn’t only in the code—it’s in our decisions.
